How close are we to the Apple AR headsets?

A short round-up of, and some thoughts on, Apple's ARKit framework in 2021

I cannot believe it is May already!

For an iOS developer, this mostly means one thing: we are getting closer to WWDC!

What awaits us this year? I believe more SwiftUI innovations are around the corner, but I am also excited about new features and APIs for Apple's augmented reality framework.

I also believe that AR will really gain traction once the glasses are announced.

Following a press release in January (quoted below), there are rumours that something may be moving in the direction of glasses.

If not this year, perhaps next year? I believe we are closer than we think.

Apple this year announced Dan Riccio will transition to a new role focusing on a new project and reporting to CEO Tim Cook [...] - Apple Press Release (January 25, 2021)

I still don’t know which project Riccio is heading, but my guess is that it’s the headset, not Titan, simply because I’m certain the headset is closer. I think it’s a sign that the headset is ready to get real, and Apple wants someone as capable as Riccio to lead it with nothing else on his plate. - from Daring Fireball

Is this a sign that the headset is ready to get real? Speculation about this abounds online. My take, too, is that the headset is closer than you think!

So this is a good moment to review the state of AR. I will recap what has happened in AR so far (mostly on Apple's side, in iOS), up to the latest addition to the platform: the LiDAR sensor.

A roundup

ARKit

ARKit is a framework that lets developers create augmented reality apps. It has been available to developers as part of Xcode 9 since the beta release at WWDC '17 in June 2017, and ARKit apps reached consumers on the App Store with the release of iOS 11.

ARKit provides scene understanding and persistence. To achieve markerless tracking, ARKit creates and manages its map of the surfaces and feature points it detects and can also load and save world maps.
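For the persistence part, ARKit (since iOS 12) exposes the map as an `ARWorldMap`, which you can request from a running session and hand back to a new configuration later. Here is a minimal sketch of saving and restoring it; the function names and the `mapURL` location are my own hypothetical choices, not part of ARKit.

```swift
import ARKit

// Hypothetical helper: archive the session's current ARWorldMap to `mapURL`.
func saveWorldMap(of session: ARSession, to mapURL: URL) {
    session.getCurrentWorldMap { worldMap, error in
        guard let worldMap = worldMap else {
            print("No world map available yet: \(String(describing: error))")
            return
        }
        do {
            // ARWorldMap supports NSSecureCoding, so it can be archived to Data.
            let data = try NSKeyedArchiver.archivedData(withRootObject: worldMap,
                                                        requiringSecureCoding: true)
            try data.write(to: mapURL, options: .atomic)
        } catch {
            print("Saving the world map failed: \(error)")
        }
    }
}

// Hypothetical helper: load a saved map and restart tracking against it.
func restoreWorldMap(into session: ARSession, from mapURL: URL) throws {
    let data = try Data(contentsOf: mapURL)
    guard let worldMap = try NSKeyedUnarchiver.unarchivedObject(ofClass: ARWorldMap.self,
                                                                from: data) else { return }
    let configuration = ARWorldTrackingConfiguration()
    // ARKit tries to relocalize against the saved map and restores its anchors.
    configuration.initialWorldMap = worldMap
    session.run(configuration, options: [.resetTracking, .removeExistingAnchors])
}
```

In practice you would probably wait until the current frame's `worldMappingStatus` is `.mapped` (or at least `.extending`) before saving, so that the map contains enough feature points to relocalize against.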

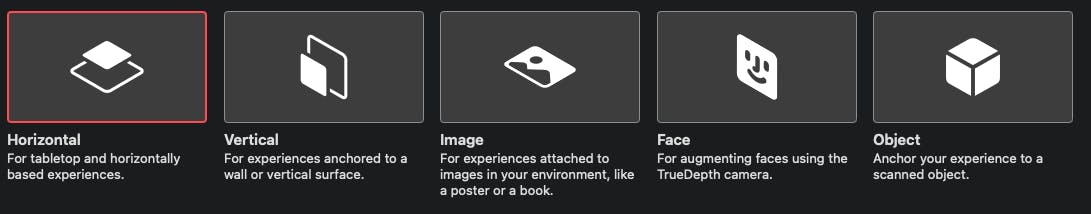

ARKit recognises objects of interest and represents them as anchors, allowing you to place virtual content on various real-world surfaces. With ARKit 1.5 it was already possible to recognise not only horizontal surfaces such as floors, ceilings, tables and chairs, but also vertical surfaces such as walls, and images such as photos and posters. Faces could be tracked from the start on devices with a TrueDepth camera, and ARKit 2 added the detection of 3D objects such as toys and consumer products.
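Plane and image detection are both switched on through the session configuration, and each detection arrives as an anchor. A minimal sketch, assuming a SceneKit-based `ARSCNView`; the asset-catalog group name "AR Resources" and the type and function names are hypothetical.

```swift
import ARKit
import SceneKit

// Hypothetical helper: world tracking with plane and image detection enabled.
func makeDetectionConfiguration() -> ARWorldTrackingConfiguration {
    let configuration = ARWorldTrackingConfiguration()
    // Horizontal planes (floors, tables) and vertical planes (walls).
    configuration.planeDetection = [.horizontal, .vertical]
    // 2D reference images (photos, posters) from a hypothetical asset-catalog group.
    if let images = ARReferenceImage.referenceImages(inGroupNamed: "AR Resources", bundle: nil) {
        configuration.detectionImages = images
    }
    return configuration
}

// ARKit reports every detection as an anchor; ARSCNView forwards it to its delegate.
final class DetectionDelegate: NSObject, ARSCNViewDelegate {
    func renderer(_ renderer: SCNSceneRenderer, didAdd node: SCNNode, for anchor: ARAnchor) {
        switch anchor {
        case let plane as ARPlaneAnchor:
            print("Plane detected:", plane.alignment == .horizontal ? "horizontal" : "vertical")
        case let image as ARImageAnchor:
            print("Image detected:", image.referenceImage.name ?? "unnamed")
        default:
            break
        }
    }
}
```

`ARSCNView.delegate` is a weak reference, so keep the `DetectionDelegate` alive elsewhere (for example as a property of your view controller), then call `sceneView.session.run(makeDetectionConfiguration())`.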

Later releases added better recognition of planes and images, as well as occlusion. Models look better thanks to improved lighting and shading, and the camera detects its surroundings better and faster.

The hardware limitations

- At the very minimum, ARKit requires a device with an A9 processor or later.

- Face tracking on devices without a TrueDepth camera needs at least the A12 Bionic chip.

- An iOS device with a front-facing TrueDepth camera is a must if you want to play with Memoji!

- And of course, you need LiDAR for some of the latest features, as explained below.
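Rather than checking device models, an app can ask ARKit at runtime which of these capabilities are available. A minimal sketch; the function name is hypothetical, and the comments restate the hardware requirements discussed above.

```swift
import ARKit

// Hypothetical helper: list the AR capabilities the current device supports.
func supportedARFeatures() -> [String] {
    var features: [String] = []
    if ARWorldTrackingConfiguration.isSupported {
        features.append("World tracking")                  // A9 or later
    }
    if ARFaceTrackingConfiguration.isSupported {
        features.append("Face tracking")                   // TrueDepth camera, or A12 Bionic or later
    }
    if ARBodyTrackingConfiguration.isSupported {
        features.append("Body tracking / motion capture")  // A12 Bionic or later
    }
    if ARWorldTrackingConfiguration.supportsSceneReconstruction(.mesh) {
        features.append("Scene reconstruction")            // requires the LiDAR Scanner
    }
    return features
}
```

If your app cannot work at all without AR, you can also add `arkit` to the `UIRequiredDeviceCapabilities` key in Info.plist so that the App Store only offers it to compatible devices.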

Some cool ARKit features

Motion Capture:

Capture the motion of a person, giving the developer access to a skeleton representation of the human body, including a detailed map of all its joints. It is also powered by Apple's Neural Engine.

Collaborative sessions:

Create shared AR experiences through live collaborative sessions, in which the devices of several users exchange data to build a common world map.

AR Coaching Overlay:

A built-in user interface overlay that guides users through an AR experience: it shows them how to scan the environment and how to move the device, perhaps even with messages about the lighting conditions. You don't have to implement it from scratch; it's there for you.

AR Quick Look:

At WWDC 2018, Apple introduced AR Quick Look. It provides a powerful augmented reality experience with user interactions such as moving and scaling the object, and it supports people occlusion and even sharing the model. It supports both the USDZ format (see below) and the .reality format (from Apple's Reality Composer app).

USDZ:

Along with AR Quick Look, Apple introduced USDZ, a new file format developed in collaboration with Pixar. It builds on technology that Pixar originally created to improve the graphics and animation pipeline of large-scale animation productions.

The added Z simply indicates that it’s a ZIP archive. The technology behind it is called Universal Scene Description (USD), a universal format for exchanging 3D content. But USD is more than a 3D file format: it is also a C++ library, with Python bindings, that reads and writes USD files. There are three file extensions mainly associated with USD:

- USDA: a plain-text file designed to be human-readable and easy to understand.

- USDC: the binary version of a USDA file, designed to be as efficient as possible.

- USD: can be either a text file or a binary file.

Light estimation and Visual Coherence:

ARKit analyses the frames of the live video feed to estimate the environmental lighting conditions and generate a real-time environment map. If your virtual content uses physically based materials, it then blends into the real-world environment more realistically and gets realistic-looking reflections. For extra realism, the renderer can also adjust the focus on objects, add motion blur where needed and add camera grain to match the camera view.
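Each feature above maps to a fairly small piece of ARKit API. The sketch below collects them in one place; the helper names are hypothetical, the networking for the collaboration data and the rest of the app are left out, and everything assumes iOS 13 or later (body tracking also needs an A12 Bionic chip).

```swift
import ARKit
import QuickLook
import SceneKit
import UIKit

// Motion capture: body tracking, then read a joint from the detected skeleton.
func makeMotionCaptureConfiguration() -> ARBodyTrackingConfiguration? {
    ARBodyTrackingConfiguration.isSupported ? ARBodyTrackingConfiguration() : nil
}

func headTransform(of body: ARBodyAnchor) -> simd_float4x4? {
    // Joint transforms are relative to the body anchor (the hip joint); convert to world space.
    guard let head = body.skeleton.modelTransform(for: .head) else { return nil }
    return body.transform * head
}

// Collaborative sessions: enable collaboration, then send every ARSession.CollaborationData
// (delivered by ARSessionDelegate.session(_:didOutputCollaborationData:)) to your peers.
func makeCollaborativeConfiguration() -> ARWorldTrackingConfiguration {
    let configuration = ARWorldTrackingConfiguration()
    configuration.isCollaborationEnabled = true
    return configuration
}

func encode(_ collaborationData: ARSession.CollaborationData) throws -> Data {
    try NSKeyedArchiver.archivedData(withRootObject: collaborationData, requiringSecureCoding: true)
}

func applyPeerData(_ data: Data, to session: ARSession) throws {
    if let collaborationData = try NSKeyedUnarchiver.unarchivedObject(
        ofClass: ARSession.CollaborationData.self, from: data) {
        session.update(with: collaborationData)
    }
}

// Coaching overlay: Apple's built-in guidance UI on top of an ARSCNView.
func addCoachingOverlay(to sceneView: ARSCNView) {
    let overlay = ARCoachingOverlayView(frame: sceneView.bounds)
    overlay.autoresizingMask = [.flexibleWidth, .flexibleHeight]
    overlay.session = sceneView.session
    overlay.goal = .horizontalPlane        // what the user is asked to find
    overlay.activatesAutomatically = true  // shown only while tracking is insufficient
    sceneView.addSubview(overlay)
}

// AR Quick Look: return this from QLPreviewControllerDataSource.previewController(_:previewItemAt:).
func quickLookItem(for usdzURL: URL) -> QLPreviewItem {
    let item = ARQuickLookPreviewItem(fileAt: usdzURL)
    item.allowsContentScaling = true       // let users pinch to scale the model
    return item
}

// Light estimation and visual coherence: per-frame lighting plus automatic environment maps.
func makeLightingConfiguration() -> ARWorldTrackingConfiguration {
    let configuration = ARWorldTrackingConfiguration()
    configuration.isLightEstimationEnabled = true
    configuration.environmentTexturing = .automatic  // environment probes for reflections
    return configuration
}
```

The configuration helpers are alternatives rather than a pipeline: you would pick the one that fits your experience and pass it to `session.run(_:)`.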

What LiDAR is for and why we need it

LiDAR stands for "Light Detection And Ranging". Sonar and radar implement a similar principle with sound and radio waves; LiDAR works with light, and it can measure the distance to surrounding objects up to 5 meters away. It works both indoors and outdoors and allows instant placement of AR objects in the world. Thanks to LiDAR, we get improved object occlusion rendering and also the depth API, which gives developers access to per-pixel depth information about the surrounding environment.
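On a LiDAR device, the depth API and the scene mesh are opt-in features of the world-tracking configuration (iOS 14 and later). A minimal sketch that enables both and reads the depth, in meters, at the centre of the depth map; the function names are hypothetical.

```swift
import ARKit
import CoreVideo

// Hypothetical helper: returns nil on devices without a LiDAR Scanner.
func makeLiDARConfiguration() -> ARWorldTrackingConfiguration? {
    guard ARWorldTrackingConfiguration.supportsFrameSemantics(.sceneDepth) else { return nil }
    let configuration = ARWorldTrackingConfiguration()
    configuration.frameSemantics.insert(.sceneDepth)  // per-pixel depth on every ARFrame
    if ARWorldTrackingConfiguration.supportsSceneReconstruction(.mesh) {
        configuration.sceneReconstruction = .mesh     // ARMeshAnchors describing the surroundings
    }
    return configuration
}

// Reads the depth at the centre pixel, e.g. from ARSessionDelegate.session(_:didUpdate:).
func centerDepth(of frame: ARFrame) -> Float? {
    guard let depthMap = frame.sceneDepth?.depthMap else { return nil }
    CVPixelBufferLockBaseAddress(depthMap, .readOnly)
    defer { CVPixelBufferUnlockBaseAddress(depthMap, .readOnly) }
    guard let base = CVPixelBufferGetBaseAddress(depthMap) else { return nil }
    let width = CVPixelBufferGetWidth(depthMap)
    let height = CVPixelBufferGetHeight(depthMap)
    let bytesPerRow = CVPixelBufferGetBytesPerRow(depthMap)
    // The depth map stores one 32-bit float (distance in meters) per pixel.
    let centerRow = base.advanced(by: (height / 2) * bytesPerRow)
    return centerRow.assumingMemoryBound(to: Float32.self)[width / 2]
}
```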

Only the new iPad Pro and iPhone Pro models have LiDAR, so at the moment it is considered a pro feature. More people use their camera to take photos than to experience AR, so having LiDAR seems of marginal importance at present. Many reviewers have met this feature with a "meh" reaction.

Daring Fireball writes about the new iPad Pro 2020:

Hardware-wise, iPad Pro got a very unusual “speed bump” update early in the year, adding one extra GPU core and a LiDAR sensor on the camera system that doesn’t seem to have many practical uses.

Let's face it, he is right. There are no practical uses for LiDAR on the iPad Pro, unless you are interested in playing with AR! The LiDAR laser points form a fairly coarse mesh, so it will not help with detecting small objects. But only with LiDAR can you get effects like objects interacting with complex surfaces that are not flat like a plane. Look at the image below to see what the LiDAR mesh looks like and how the tossed objects are distributed and come to rest on the steps.

This is only possible thanks to LiDAR, which creates a self-updating mesh that gives the location of objects and boundaries and lets them take part in physics! Previously we had to get an anchor and create a plane for each of these surfaces!

This does look deceptively simple. However, it is a huge deal!
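The mesh-with-physics effect described above takes surprisingly little code with RealityKit on a LiDAR device. A minimal sketch, assuming an `ARView` that is already on screen; the function names, box size and drop distance are illustrative choices.

```swift
import ARKit
import RealityKit

// Hypothetical helper: the reconstructed mesh occludes virtual content and acts as a collider.
func enableSceneUnderstanding(in arView: ARView) {
    arView.automaticallyConfigureSession = false
    let configuration = ARWorldTrackingConfiguration()
    if ARWorldTrackingConfiguration.supportsSceneReconstruction(.mesh) {
        configuration.sceneReconstruction = .mesh
    }
    arView.environment.sceneUnderstanding.options.insert(.occlusion)
    arView.environment.sceneUnderstanding.options.insert(.collision)
    arView.environment.sceneUnderstanding.options.insert(.physics)
    arView.debugOptions.insert(.showSceneUnderstanding)  // visualise the mesh
    arView.session.run(configuration)
}

// Hypothetical helper: drops a 5 cm dynamic box half a metre in front of the camera.
func dropBox(in arView: ARView) {
    let box = ModelEntity(mesh: .generateBox(size: 0.05),
                          materials: [SimpleMaterial(color: .red, isMetallic: false)])
    box.generateCollisionShapes(recursive: false)  // collision shape for the physics engine
    box.physicsBody = PhysicsBodyComponent(massProperties: .default,
                                           material: .default,
                                           mode: .dynamic)  // falls under gravity

    var transform = arView.cameraTransform
    transform.translation += transform.rotation.act(SIMD3<Float>(0, 0, -0.5))
    let anchor = AnchorEntity(world: transform.matrix)
    anchor.addChild(box)
    arView.scene.addAnchor(anchor)
}
```

Calling `dropBox(in:)` a few times while pointing at a staircase should reproduce the kind of scene in the image: the boxes land on the mesh instead of on a single detected plane.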

A recent addition: App Clips and AR

I have not seen this in the wild yet, but it has potential and is a very nice addition to the App Clips feature Apple introduced at WWDC20.

App Clips let the user run a lightweight part of your app on demand, without installing the full app. An App Clip is usually launched from a special code (an App Clip Code or a QR code), an NFC tag or a link.

For example, a user can scan an App Clip Code on a box containing a retail product. The App Clip displays a virtual 3D rendition of the item inside the box, so the user doesn't need to open the box to see what's inside. - From the Apple Documentation
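ARKit (iOS 14.3 and later) can detect App Clip Codes in the camera feed and decode their URLs, which is the starting point for an experience like the one Apple describes. A minimal sketch; the class name is hypothetical, and loading and placing the 3D model for the decoded URL is left out.

```swift
import ARKit

// Hypothetical scanner that reports App Clip Codes found in the camera feed.
@available(iOS 14.3, *)
final class AppClipCodeScanner: NSObject, ARSessionDelegate {
    let session = ARSession()

    override init() {
        super.init()
        session.delegate = self
    }

    func start() {
        guard ARWorldTrackingConfiguration.supportsAppClipCodeTracking else { return }
        let configuration = ARWorldTrackingConfiguration()
        configuration.appClipCodeTrackingEnabled = true
        session.run(configuration)
    }

    // Decoding happens asynchronously, so check the state on every anchor update.
    func session(_ session: ARSession, didUpdate anchors: [ARAnchor]) {
        for case let codeAnchor as ARAppClipCodeAnchor in anchors
            where codeAnchor.urlDecodingState == .decoded {
            // The decoded URL tells the App Clip which product (and 3D model) to show.
            if let url = codeAnchor.url {
                print("App Clip Code decoded:", url)
            }
        }
    }
}
```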

A last thought

This sentence made me think. Wait a moment!

[With AR] we're able to bring the real world into your virtual one. - Saad Ahmad, RealityKit engineer, Apple, WWDC20

When Saad said this during the WWDC presentation, a light bulb went on in my head. It is true: I realised that, contrary to what we may think, AR does not bring virtual reality into the real world. The real world remains untouched! :)

It is the opposite! We bring the real world into your virtual one.

A programmer experiences this first-hand: we use the camera to bring reality into our virtual world, not the opposite. It is obvious, but an interesting point.

Sources

MacRumors has a nice roundup of Apple's AR (and possibly VR) projects:

https://www.macrumors.com/roundup/apple-glasses/

Apple Press Release:

https://www.apple.com/newsroom/2021/01/dan-riccio-begins-a-new-chapter-at-apple/

Apple Human Interface Guidelines - Augmented Reality:

https://developer.apple.com/design/human-interface-guidelines/ios/system-capabilities/augmented-reality/

What's new in RealityKit - Apple WWDC20:

https://developer.apple.com/videos/play/wwdc2020/10612/

Pokémon Go, an early AR reference:

https://apps.apple.com/us/app/pokemon-go/id1094591345

Download 3D Models:

https://sketchfab.com

USDZ Tools: https://apple.co/36TN9WJ

See Roxana's video about AR, with lots of nice slides, on youtu.be/

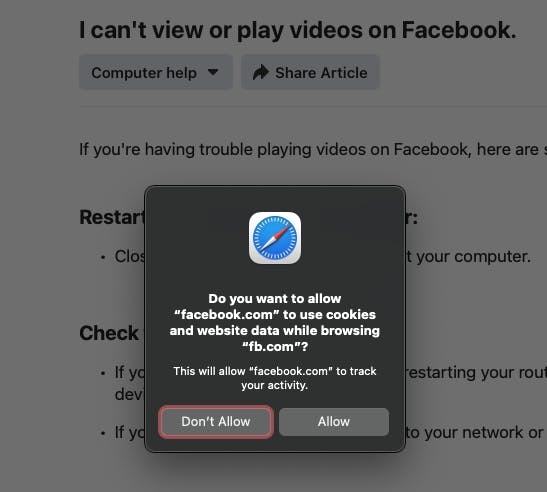

For comparison, Facebook's Project Aria:

https://about.fb.com/realitylabs/projectaria/

I am sorry, Facebook, but no, I will not allow you to collect all my data. I only wanted to check out the competition for Apple's AR glasses :)

More about LiDAR on Wikipedia